Another task that many developers face when working with Azure Storage is moving files between accounts. Many customers upload files to the cloud, process them, and then keep the originals in cheaper cold storage. In this article I'll show you how to move blobs between accounts using Azure Functions and Event Grid.

There is currently no “move” operation as such, programmatically speaking. A move consists of two separate actions: copying the file to the destination and then deleting it from the source.

There are many reasons to copy a file, and many ways to do it. For this example, I'm going to use a Python function with an Event Grid trigger, so that simply uploading a file to a storage account starts the whole process.

Once the function receives the event, the first thing it does is log the information that comes with it. I installed the azure-storage-blob package to create two clients: one that uses the source account's connection string and one that uses the destination's. I need the source client because my blobs are in private containers, so I have to generate a SAS token to access them. The destination client is needed to perform the copy.

The details of the blob to move are taken from the url property that comes with the event. I then create a container in the destination account whose name is the source container name followed by -archived (this suffix is also read from an environment variable, in case you want to use a different one). Once this is done, I start the blob copy, which the Azure Storage service itself performs asynchronously.
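A minimal sketch of what such a copy function could look like, assuming the Python v1 programming model (an eventGridTrigger binding named event in function.json), hypothetical app settings named SOURCE_CONNECTION_STRING, DESTINATION_CONNECTION_STRING and ARCHIVE_SUFFIX, and connection strings that include an account key (needed to sign the SAS). The SDK imports sit inside main only so that the URL helper can be tried without the Azure packages installed; in a real Function App you would normally import them at module level and annotate the parameter as func.EventGridEvent.

```python
import logging
import os
from datetime import datetime, timedelta, timezone
from urllib.parse import unquote, urlparse


def parse_blob_url(url: str) -> tuple[str, str, str]:
    """Split a blob URL into (account name, container name, blob name)."""
    parsed = urlparse(url)
    account = parsed.netloc.split(".")[0]
    # The path is /<container>/<blob name>; blob names may contain slashes.
    container, _, blob_name = parsed.path.lstrip("/").partition("/")
    return account, container, unquote(blob_name)


def main(event):  # event: azure.functions.EventGridEvent (sketch assumption)
    # Local imports: a choice for this sketch, see the note above.
    from azure.core.exceptions import ResourceExistsError
    from azure.storage.blob import (BlobSasPermissions, BlobServiceClient,
                                    generate_blob_sas)

    logging.info("Event %s (%s): %s", event.id, event.event_type, event.subject)
    source_url = event.get_json()["url"]  # URL of the blob that was just created
    _, container, blob_name = parse_blob_url(source_url)

    # Hypothetical setting names; adjust to your own configuration.
    source = BlobServiceClient.from_connection_string(
        os.environ["SOURCE_CONNECTION_STRING"])
    destination = BlobServiceClient.from_connection_string(
        os.environ["DESTINATION_CONNECTION_STRING"])

    # Short-lived, read-only SAS so the service can read the private source blob.
    sas = generate_blob_sas(
        account_name=source.account_name,
        container_name=container,
        blob_name=blob_name,
        account_key=source.credential.account_key,
        permission=BlobSasPermissions(read=True),
        expiry=datetime.now(timezone.utc) + timedelta(hours=1),
    )

    # Destination container: source container name plus the archive suffix.
    suffix = os.environ.get("ARCHIVE_SUFFIX", "-archived")
    target = destination.get_container_client(container + suffix)
    try:
        target.create_container()
    except ResourceExistsError:
        pass  # created by an earlier invocation

    # Server-side copy: this only schedules it; Azure Storage runs it asynchronously.
    copy = target.get_blob_client(blob_name).start_copy_from_url(f"{source_url}?{sas}")
    logging.info("Copy %s started with status %s", copy["copy_id"], copy["copy_status"])
```

The function does not wait for the copy to finish: start_copy_from_url returns as soon as the service has accepted the request, together with the copy id and its initial status.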

In the requirements.txt file I have added the modules needed by both the copy function and the delete function, which I will show you later, including azure-functions and azure-storage-blob.

Event Grid subscription on the source account

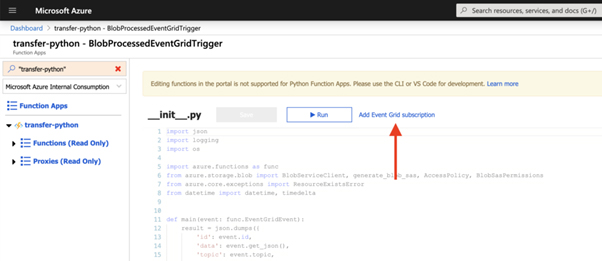

Once you have deployed your new function to your Function App, the next step is to create an Event Grid subscription on the storage account you want to monitor. The easiest way to create this subscription is through the portal: select the function you have just deployed and click the Add Event Grid subscription link.

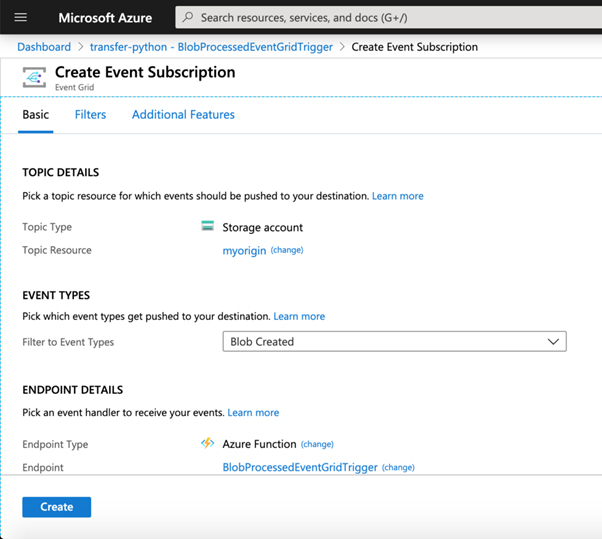

In the wizard, choose Azure Storage Accounts as the resource type, then the subscription, the resource group and the source storage account. Under event types, select only Blob Created.

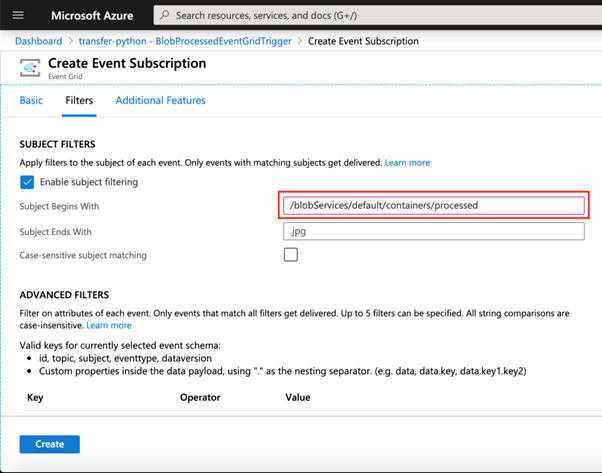

You can trigger the function for any blob created in that account, or narrow it down in the Filters section. For example, to invoke this function only for blobs created in the processed container, enter /blobServices/default/containers/processed in Subject Begins With.
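To see what Subject Begins With actually compares against, here is a small sketch that emulates the filter locally. It assumes the subject format used by Blob Storage events (/blobServices/default/containers/&lt;container&gt;/blobs/&lt;path&gt;) and Event Grid's default case-insensitive subject matching; matches_subject_filter and the processed-old container are hypothetical, not part of any SDK or of the original setup.

```python
def matches_subject_filter(subject: str, begins_with: str = "", ends_with: str = "",
                           case_sensitive: bool = False) -> bool:
    """Emulate Event Grid's Subject Begins With / Subject Ends With filters."""
    if not case_sensitive:
        subject, begins_with, ends_with = (
            subject.lower(), begins_with.lower(), ends_with.lower())
    return subject.startswith(begins_with) and subject.endswith(ends_with)


prefix = "/blobServices/default/containers/processed"
in_processed = "/blobServices/default/containers/processed/blobs/a.csv"
in_processed_old = "/blobServices/default/containers/processed-old/blobs/a.csv"

print(matches_subject_filter(in_processed, prefix))            # True
print(matches_subject_filter(in_processed_old, prefix))        # True: plain prefix match
print(matches_subject_filter(in_processed_old, prefix + "/"))  # False
```

Because the comparison is a plain prefix match, ending the value with a slash keeps other containers whose names merely start with processed from also triggering the function.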

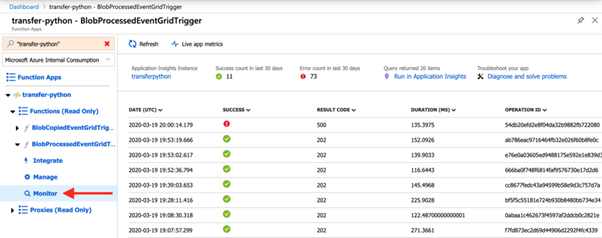

Once the subscription is created, you can check that uploading a file to the processed container in the source storage account triggers your new function. All executions are listed in the Monitor section, but remember that it can take up to 5 minutes for them to appear there.

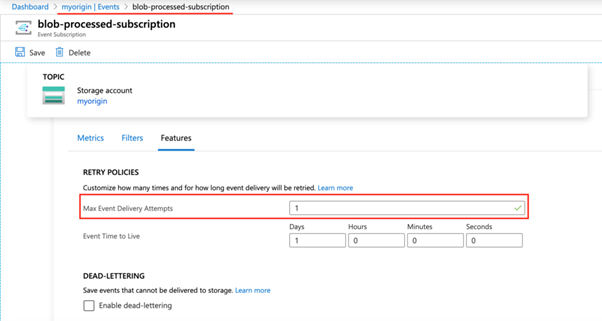

Occasionally, the function may fail for various reasons (the file name is not valid for a blob, there is a bug in the code, etc.). When that happens, the event is not marked as processed and Event Grid retries its delivery. By default it tries up to 30 times, which can trigger many calls for a file or scenario you had not anticipated. For this reason, you can also control the number of retries per event. You can set it while creating the subscription, in the Additional Features section, or later by modifying the existing subscription: in the storage account, open the Events section and select the subscription you created. Then, in the Features section, you can change the maximum number of delivery attempts, among other values.
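Retries only help with transient failures; an event that can never succeed is simply retried until the limit is reached. One possible mitigation, sketched here as an assumption rather than as part of the original function, is to validate the destination container name up front and skip the event with a log message instead of raising. Azure container names must be 3 to 63 characters long, use only lowercase letters, digits and hyphens, start and end with a letter or digit, and contain no consecutive hyphens, so with a -archived suffix any source container name longer than 54 characters would fail. The helper names are hypothetical.

```python
import logging
import re

# Container naming rules: 3-63 chars of lowercase letters, digits and hyphens,
# starting and ending with a letter or digit, with no consecutive hyphens.
_CONTAINER_NAME = re.compile(r"[a-z0-9](?!.*--)[a-z0-9-]{1,61}[a-z0-9]")


def is_valid_container_name(name: str) -> bool:
    return _CONTAINER_NAME.fullmatch(name) is not None


def archive_container_or_none(source_container: str, suffix: str = "-archived"):
    """Return the destination container name, or None (after logging) if invalid.

    A caller that returns early on None completes the invocation successfully,
    so Event Grid stops redelivering an event that could never be processed.
    """
    target = source_container + suffix
    if not is_valid_container_name(target):
        logging.warning("Skipping event: %r is not a valid container name", target)
        return None
    return target
```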

Deleting the original file after the copy

If you tested your function before continuing with the article, the uploaded file should already have been copied to the storage account you chose as the destination, into a container named after the source container plus the -archived suffix (for example, processed-archived). Ideally, this account should use the Cool access tier, since the goal is to keep the original files while rarely, if ever, accessing them again.

The last step is to delete the original file from the source account. To do this, I have again used Event Grid as the mechanism to detect when a new blob has been created.

In this case, follow the same procedure to subscribe to the Blob Created event, but on the destination account. In the code, the first thing I do is set a one-day delete retention policy on the source account, so that when the blob is deleted a few lines later it is soft-deleted rather than permanently removed, in case it needs to be recovered within that grace period.
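A minimal sketch of what such a delete function could look like, assuming the Python v1 programming model (an eventGridTrigger binding named event), hypothetical app settings SOURCE_CONNECTION_STRING and ARCHIVE_SUFFIX, and destination containers that are always named after the source container plus that suffix. It maps the new blob back to its source location, enables a one-day soft-delete retention on the source account and then deletes the original. The SDK import is kept inside main only so the mapping helper can be tried without the Azure packages installed.

```python
import logging
import os
from urllib.parse import unquote, urlparse


def source_location(archived_url: str, suffix: str = "-archived") -> tuple[str, str]:
    """Map a blob URL in the destination account to (source container, blob name)."""
    path = urlparse(archived_url).path.lstrip("/")
    container, _, blob_name = path.partition("/")
    if not container.endswith(suffix):
        raise ValueError(f"{container!r} does not end with {suffix!r}")
    return container[: -len(suffix)], unquote(blob_name)


def main(event):  # event: azure.functions.EventGridEvent (sketch assumption)
    from azure.storage.blob import BlobServiceClient, RetentionPolicy

    logging.info("Event %s (%s): %s", event.id, event.event_type, event.subject)
    try:
        container, blob_name = source_location(
            event.get_json()["url"], os.environ.get("ARCHIVE_SUFFIX", "-archived"))
    except ValueError:
        # Not an archived blob: log and return so Event Grid does not retry it.
        logging.warning("Ignoring blob outside an archive container: %s", event.subject)
        return

    source = BlobServiceClient.from_connection_string(
        os.environ["SOURCE_CONNECTION_STRING"])
    # Soft delete: deleted blobs stay recoverable for one day.
    source.set_service_properties(
        delete_retention_policy=RetentionPolicy(enabled=True, days=1))
    source.get_blob_client(container, blob_name).delete_blob()
    logging.info("Deleted %s/%s from the source account", container, blob_name)
```

Keep in mind that the retention policy applies to the whole source account, not only to the blob being deleted.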

The complete example code is available in my GitHub account.

Cheers!