This is the third post in a series of articles on GitOps tools. This post reviews Werf, a continuous integration and continuous deployment tool for Kubernetes. If you missed the previous articles, which reviewed ArgoCD and FluxCD, consider giving them a read, as they will help you better understand the general idea.

As with the previous tools, an example repository has been developed so that you can test Werf with some existing configurations. You can find the repository here.

GitOps & Werf ⛵

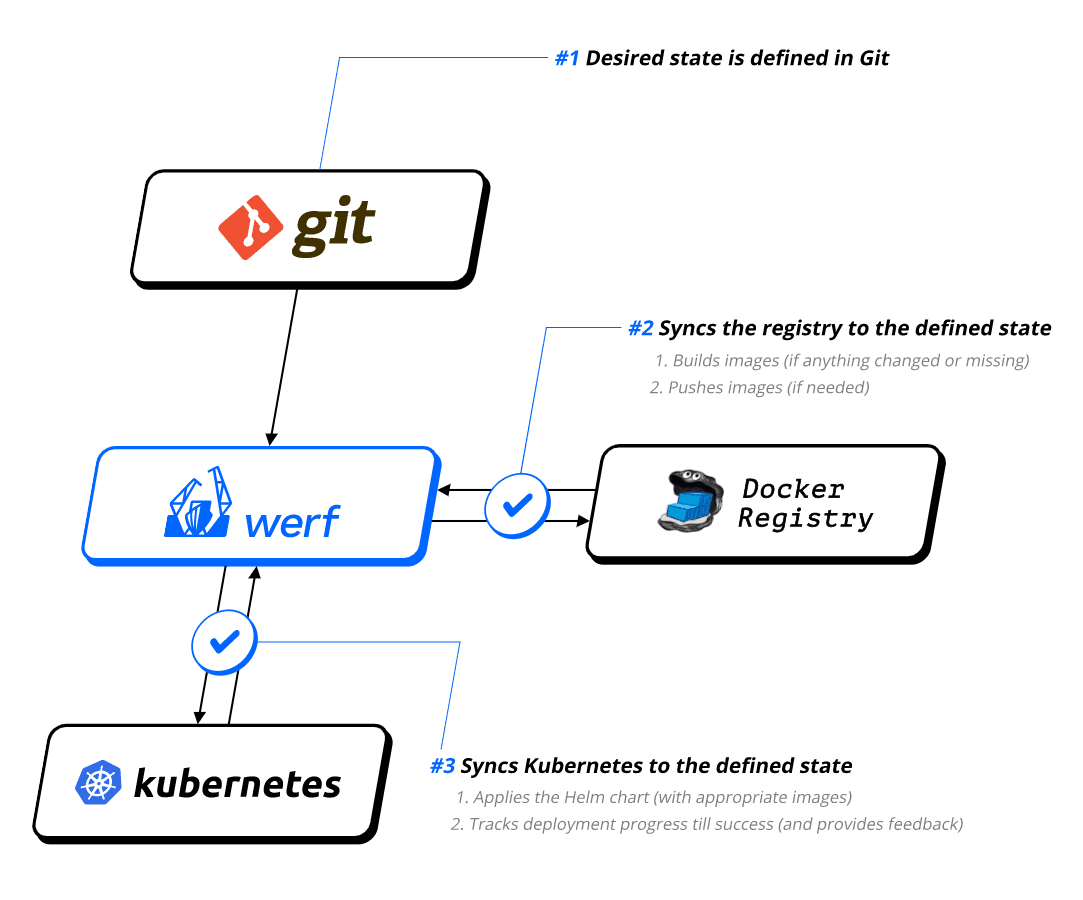

GitOps is defined as a way of managing Kubernetes infrastructure and applications in which Git repositories hold the declarative definition of what has to be running in Kubernetes. A Git repository is considered the single source of truth in the GitOps model. With this approach, changes in the application definitions (code) are reflected on the infrastructure. Besides, it is possible to detect differences and drifts between the source-controlled application and the different versions deployed.

GitOps allows faster delivery rates: once the code is pushed to the production branch, it will be present in the cluster in a matter of seconds, increasing productivity. This model also increases reliability, as rollbacks and recovery procedures are eased by the fact that the entire set of applications is version-controlled in Git. Furthermore, stability and consistency are also improved.

Werf is an open source GitOps tool which aims to speed up application delivery by automating and simplifying the complete application life-cycle. To do so, Werf builds and publishes images, deploys applications to Kubernetes clusters, and removes unused images based on policies and rules defined in the Git repository.

Werf can build Docker images from Dockerfiles or with an alternative builder, which uses a custom syntax and supports Ansible as well as incremental rebuilds based on Git history.

Werf deploys applications to Kubernetes using Helm-compatible charts with more extensive customizations. Furthermore, it provides roll-out tracking, error detection and logging mechanisms for the application releases. The logic driving these mechanisms can be customized by means of Kubernetes annotations.

Werf is distributed as a CLI written in Go, so it can run on virtually any modern OS, and it has been designed to integrate easily with any CI/CD platform.

Installing Werf 💻

In order to install the Werf CLI, you first need to install Docker and Git on your machine. In case you haven’t installed them yet, you can find the installation instructions for Docker here, and for Git here. Once these dependencies are present, it’s time to install Werf.

The Werf project recommends installing the CLI by means of the multiwerf utility, which ensures that the Werf CLI is always up to date. Execute the following commands to get it installed on your machine.

Select the Werf version to use and configure your terminal session to use that version by issuing the following command. Werf provides 5 stability levels to choose from, so that you can choose a level depending on your environments and needs.

In case you don’t want to use multiwerf features, you can just follow the ‘good old’ approach and get a specific version of the Werf CLI binary from here.

Project structure 📁

Werf expects files to be organized following a specific structure within the code repository. The image below shows the structure of the example repository that we will be using for this post, which contains a simple webpage (in /web) that is packaged in an nginx Docker image and deployed to a Kubernetes cluster using Helm.

At the top level of the repository you can find werf.yaml, a YAML configuration file that defines how to build the images and contains metadata and directives for customizing Werf’s behavior. The file has three parts, each separated by three hyphens: metadata, image build information, and artifact build information. Werf also supports Go templates in werf.yaml, as you can see in the last line of the following example, which makes configurations really powerful. Besides, by including different templates, werf.yaml can be split into several files to better handle complex configuration scenarios.

At the same level you can find a Dockerfile, used to build the image. This Dockerfile can be directly referenced from werf.yaml to be used. However, Werf supports custom syntax to build images, directly specified within werf.yaml as you will see in the upcoming examples.

The .helm directory contains the Helm charts used by Werf to deploy the application, organized following the Helm chart file structure. Notice that Chart.yaml is not included, as it is not used by Werf.
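As a rough text rendering of the layout described above, the following sketch scaffolds an empty project with the same files. The helper name scaffold_werf_project and the index.html file name are illustrative assumptions, and the example repository may contain additional files.

```shell
# Hypothetical helper (not part of Werf): create an empty skeleton of the
# layout described above under the directory given as the first argument.
scaffold_werf_project() {
  root="$1"
  mkdir -p "$root/web" "$root/.helm/templates"
  # Werf configuration and the Dockerfile live at the top level.
  touch "$root/werf.yaml" "$root/Dockerfile"
  # The static page packaged into the nginx image (file name assumed).
  touch "$root/web/index.html"
  # Helm templates and the dependency list; note that there is no Chart.yaml.
  touch "$root/.helm/templates/deployment.yaml" "$root/.helm/requirements.yaml"
}
```

Calling scaffold_werf_project ./my-werf-app would create the skeleton in a new my-werf-app directory.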

Building images 📦

First, clone the examples repository to get started.

Building images from a Dockerfile is the easiest way to start using Werf in an already existing project. To do so, you will only need to add the dockerfile directive with the Dockerfile name (Dockerfile in this case) under the image config in your werf.yaml file.
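To make the dockerfile directive concrete, here is a minimal sketch that writes such a werf.yaml, assuming the v1.1 configuration format. The project name example-web, the image name web, and the helper name write_werf_config are placeholders, not the values used in the example repository.

```shell
# Hypothetical helper: write a minimal werf.yaml into the directory given as
# the first argument. The metadata section and the image section are
# separated by three hyphens; the image is built from the existing Dockerfile.
write_werf_config() {
  cat > "$1/werf.yaml" <<'EOF'
project: example-web
configVersion: 1
---
image: web
dockerfile: Dockerfile
EOF
}
```

With this configuration, Werf delegates the whole build of the web image to the Dockerfile found next to werf.yaml.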

The code below shows the contents of the Dockerfile. It is fairly simple, as it just adds the webpage and its media to the image and exposes port 80 for the nginx server to listen on.

However, Werf provides advanced features for image building, eliminating the need for Dockerfiles, although they can be combined with the Werf builder syntax. Besides, you can build as many different images as you need using just one Werf configuration file (multi-image build). The code below shows the contents of the werf.yaml file of the example repository.

In this case, the image is built using different Werf directives. First, Ansible syntax is used with the ansible directive to modify and create some directories. Shell commands can be executed during the build phase with the shell directive; however, the ansible and shell directives are mutually exclusive within an image, so when one is used the other cannot be. Notice the commented shell directive as an example.

Blocks inside the ansible and shell directives are known as Werf assembly instructions. Werf provides four user stages for executing these instructions: beforeInstall, install, beforeSetup, and setup. They are executed in that order, one after another. If you want to know more about user stages and the wide range of possibilities they offer, check this documentation.

The git directive is used to add files from the local Git repository into the image, but it can be used to add files from remote repositories too, allowing you to specify the branch, tag or commit to use, as well as path-based filters. Besides, this directive can be used to trigger the rebuilding of images when specific changes are made inside a Git repository. To see a comprehensive description of the directive and its capabilities, check this documentation.

Finally, the docker directive is used following a Dockerfile-like syntax in order to build the image. You can check the details of this directive here.

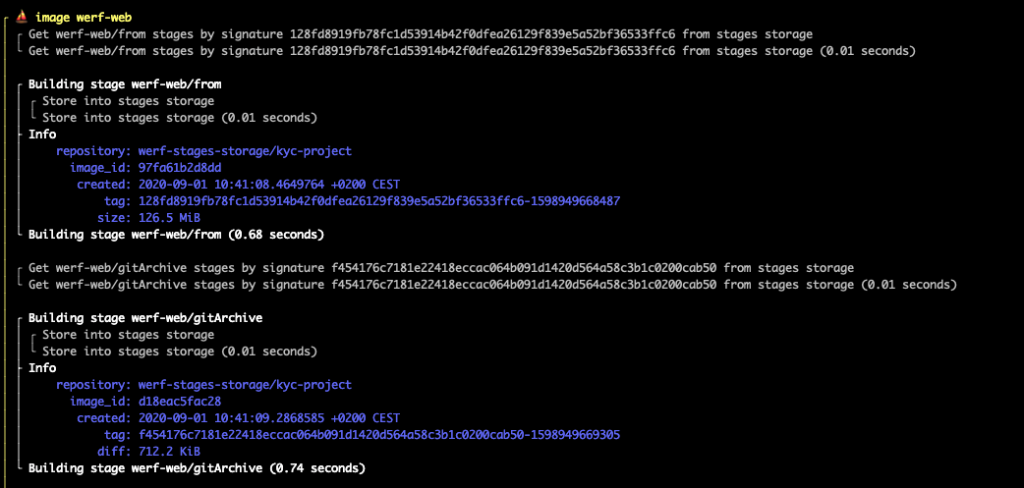

To build your Docker image, execute the following command at the root level of your repository, so that the CLI can find the werf.yaml file. Note the --stages-storage flag, which tells Werf where to store the built stages, that is, the intermediate layers used to generate the image. In this case, by passing the local value, Werf will store the stages using the local Docker runtime.
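A minimal sketch of this build step, assuming the Werf v1.1 CLI, where local stages storage is written as :local. The wrapper function build_images is a hypothetical convenience so the command can be reused in scripts.

```shell
# Build all images defined in werf.yaml, keeping the intermediate stages in
# the local Docker runtime. Run it from the repository root so that Werf can
# find werf.yaml. build_images is an illustrative wrapper, not a Werf command.
build_images() {
  werf build --stages-storage :local
}
```

Newer Werf releases changed how stages storage is configured, so check the flags against the version you installed.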

During the build process, the different stages are executed and their outputs are shown in the terminal.

Once the image is built successfully, you can push it to an image repository. For this example I used a Docker Hub public repo for simplicity. If you don’t have an account yet, just sign up and create a public repository. Once it’s ready, generate an access token so that Werf can push the built images to the repository. To push the image, issue the following command.

TIP: If you prefer using a local Docker registry rather than Docker Hub, you can just run the following command, and specify --images-repo localhost:5000/your-repo-name when issuing the werf publish command.
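One common way to get such a local registry is the official registry:2 image. The sketch below assumes that image and the container name registry; the wrapper start_local_registry is hypothetical.

```shell
# Start a local Docker registry listening on localhost:5000.
# -d runs it in the background, and --restart=always brings it back up
# whenever the Docker daemon restarts.
start_local_registry() {
  docker run -d -p 5000:5000 --restart=always --name registry registry:2
}
```

Images pushed to localhost:5000 stay on your machine, which is handy for trying Werf without a Docker Hub account.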

TIP 2: You can build and publish your image by just using one command.

Werf and secrets 🔐

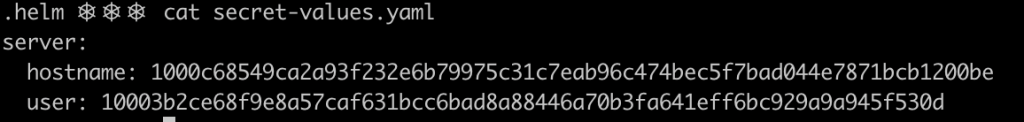

Werf also comes in handy when working with sensitive values and secrets in Helm templates. Werf allows you to encrypt values files so that, once they are pushed to a repository, no one can recover the original values without the secret key. Werf reads this key from the WERF_SECRET_KEY environment variable or from the .werf_secret_key file in the project root.

Generate a secret key and configure it as an environment variable for your shell session.
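This step can be sketched as follows, assuming the Werf v1.1 CLI, where the key generator lives under werf helm secret. The wrapper export_werf_secret_key is a hypothetical helper.

```shell
# Generate a new secret key and export it for the current shell session only.
# Returns a non-zero status if the key could not be generated.
export_werf_secret_key() {
  WERF_SECRET_KEY="$(werf helm secret generate-secret-key)" || return 1
  export WERF_SECRET_KEY
}
```

Keep the generated key somewhere safe and out of the repository: anyone holding it can decrypt the secret values.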

Use Werf to generate the secret values file. This command will open a text editor so that you can create or modify the values.

Paste the following set of test values into the editor, then save your changes.

If you try to read the content of .helm/secret-values.yaml, you will notice that the values have been encrypted, so it is now safe to store these secret values in your repository.

The secret values can be referenced as any other Helm value within the templates. For this example, they are injected in the container as environment variables.

Deploying to K8s ☸️

As mentioned earlier, Werf can use Helm templates to deploy applications to Kubernetes clusters. Werf searches for templates within .helm/templates and renders them as Helm would normally do. Besides, Werf extends Helm’s functionality with three-way merge patches and resource tracking annotations.

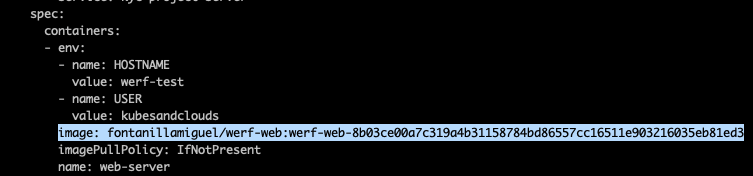

The code below shows the contents of .helm/templates/deployment.yaml, which uses the previously built image to deploy a three-replica web server in Kubernetes.

Notice how the Go template functions werf_container_image and werf_container_env are used in the deployment manifest. These functions are evaluated when rendering the chart and generate the keys image and imagePullPolicy based on the tagging strategy, and the environment variable DOCKER_IMAGE_ID, respectively.

Like Helm, Werf uses the .helm/requirements.yaml file to define chart dependencies, like the nginx ingress controller in this case, to expose the web server. To build the dependencies, issue the following command.

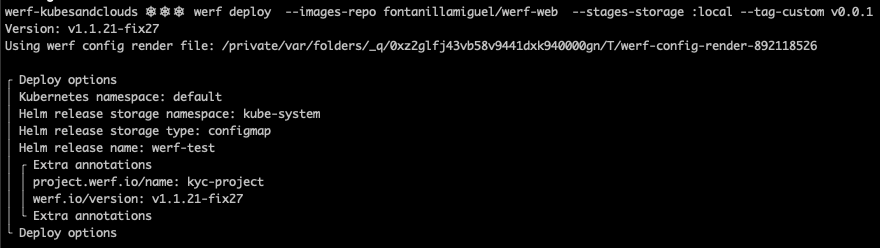

Once the dependencies have been fetched, deploy the application to your cluster. Werf uses the system default kubeconfig through the environment variable KUBECONFIG, but you can use a different kubeconfig by passing its path with the --kube-config flag. The following command triggers the deployment process.

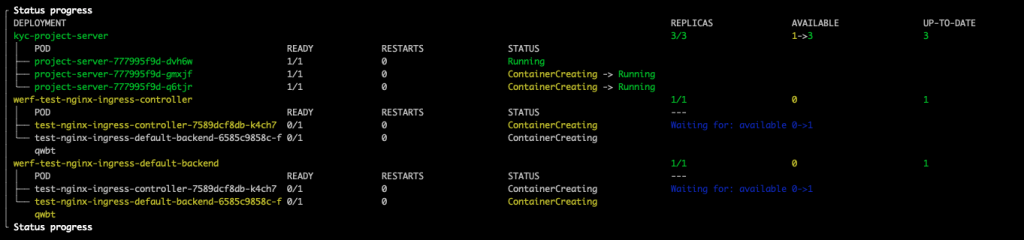

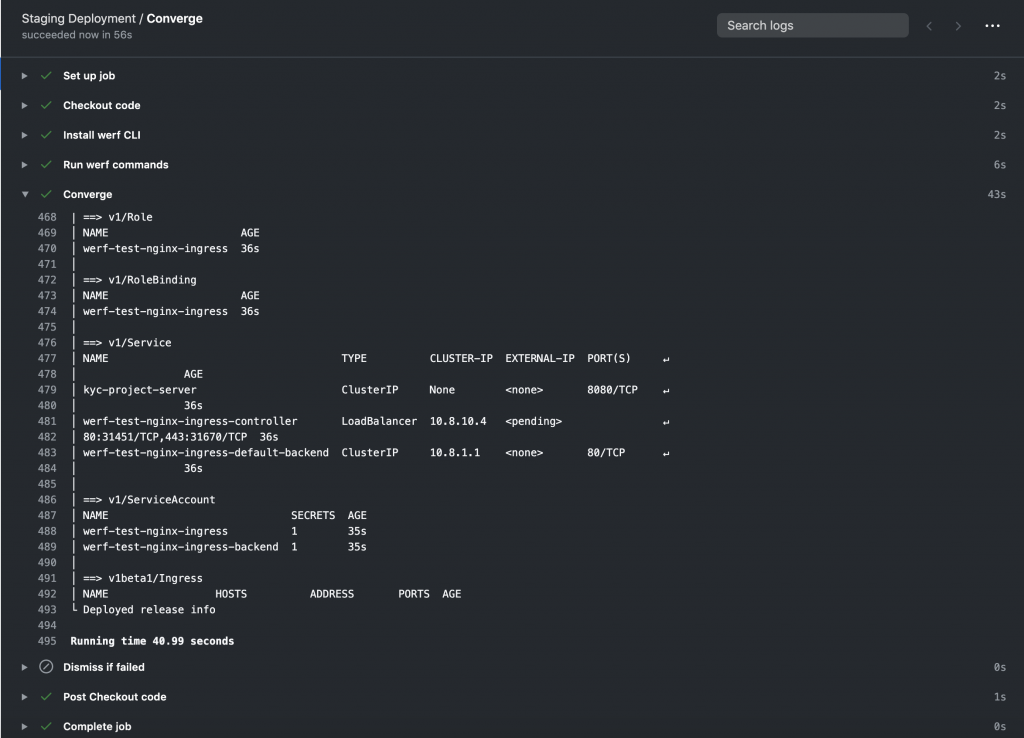

While Werf is performing the application deployment by means of Helm, the status progress is shown for all the components that are being deployed.

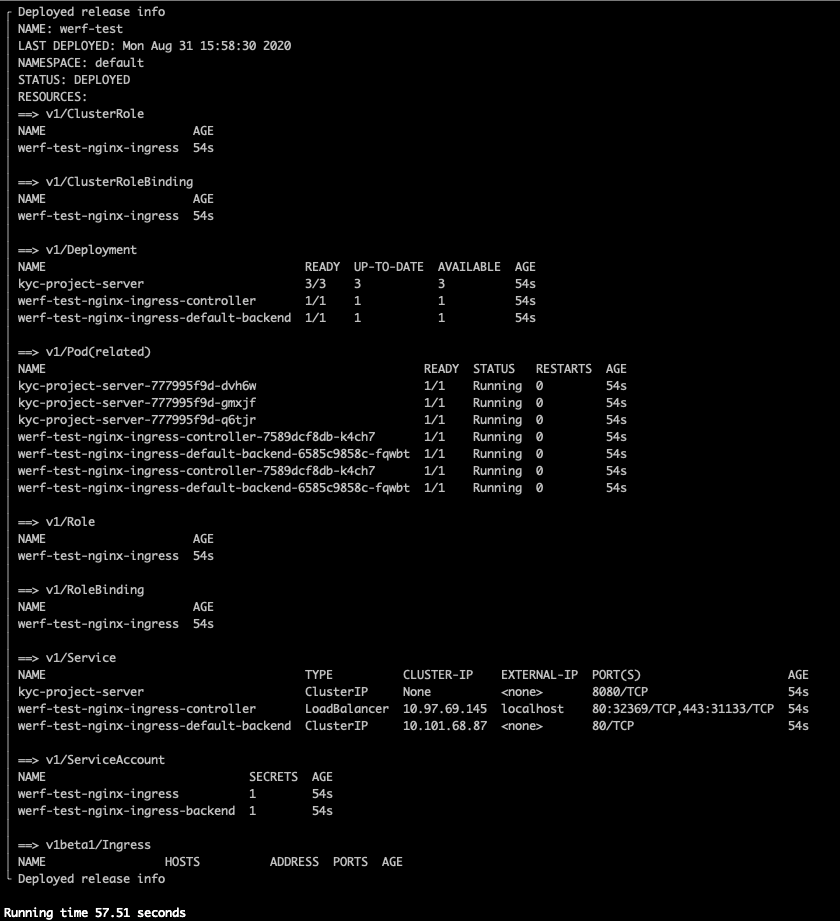

Once the deployment has been completed, a summary is displayed.

If you deployed your application to a local cluster using Minikube or Docker Desktop, execute the following command so that you can access your application through the ingress.

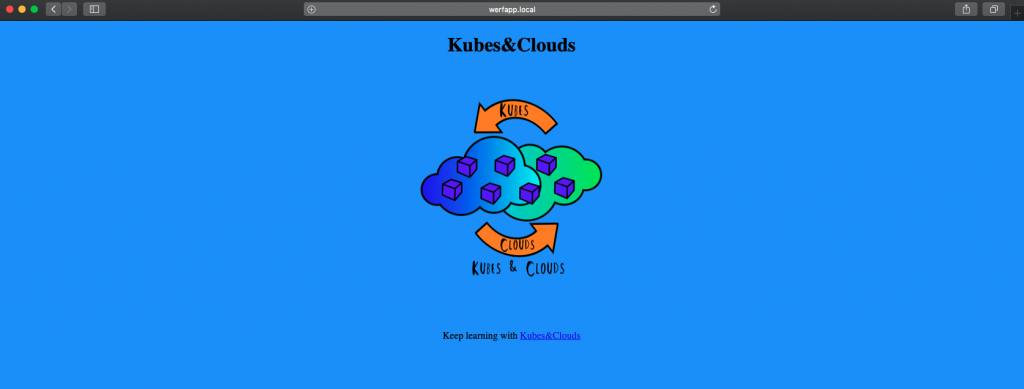

If everything worked out, you should be able to see the example webpage displaying a pretty cool logo 😏 .

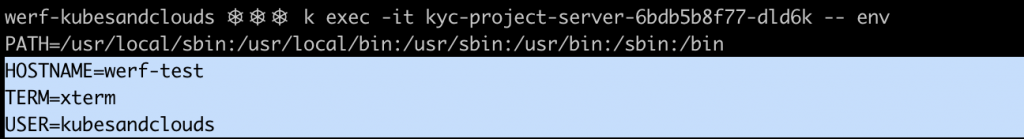

By the way, remember those super secret values we injected as environment variables? If you check the environment variables of the container within one of the pods, you can see how the values worked as expected.

TIP 3: The converge command combines the werf build, werf publish and werf deploy commands. Notice that the --tag-custom flag is not used in this command, as the image tag is generated by Werf.

Cleaning up 🧹

Once you’re done playing around with the example application, it’s time to remove the release and its associated resources. By executing the following command, the Helm release is removed from the cluster.

In order to remove both the images pushed to the Docker repository and the intermediate stages generated, issue the following command. Notice that for Docker Hub repositories, both username and password need to be specified to delete images.

TIP 4: The cleanup command can be used to delete unused images and stages. It is a good practice to run this command daily to keep just those images which are in use.

CI/CD integrations 🐙🐱

As you have seen, Werf is a pretty powerful tool, and it makes sense to integrate it in a CI/CD environment to improve the overall automation and application lifecycle management. Integrating Werf is pretty simple if done ‘manually’, since it’s shipped as a CLI and its behavior can be configured by means of environment variables. Furthermore, Werf provides a command, ci-env, which detects the CI/CD system configuration and stores it in environment variables, making it even easier.

Besides, native integrations have been developed for both GitLab CI and GitHub Actions. The code below shows an example that uses the actions provided by Werf to deploy into a Kubernetes cluster when new changes are pushed to the deploy branch, using GitHub Actions. The action werf/actions/converge@master executes the werf converge command, building the images and deploying the application to the cluster pointed to by the kubeconfig stored as a secret in KUBE_CONFIG_BASE64_DATA.

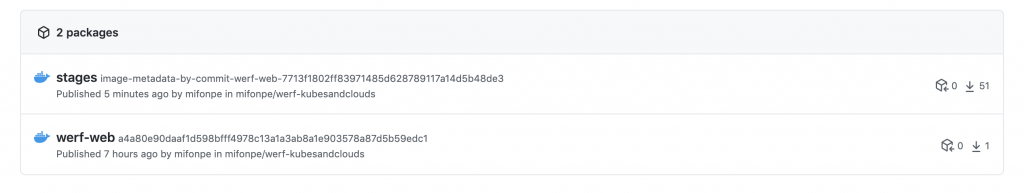

If you want to try this integration, you can fork the project and switch to the deploy branch, which is already configured to be used with GitHub Actions. In this case, if no Docker registry is specified, Werf will use GitHub Packages as the image repository to store both the intermediate stages and the images.

In order to allow your Kubernetes cluster to pull images from this registry, you will need to create a Docker credentials secret with your username and a valid GitHub Personal Access Token, and then add that secret to the service account to use (the default one in this case).
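These two steps can be sketched with standard kubectl commands. The registry host docker.pkg.github.com is an assumption about the GitHub Packages endpoint in use, and the helper name configure_pull_secret and its argument order are hypothetical.

```shell
# Hypothetical helper: create a registry credentials secret and attach it to
# the default service account so that pods can pull images from the registry.
# Arguments: secret name, GitHub username, GitHub Personal Access Token.
configure_pull_secret() {
  kubectl create secret docker-registry "$1" \
    --docker-server=docker.pkg.github.com \
    --docker-username="$2" \
    --docker-password="$3" &&
  kubectl patch serviceaccount default \
    -p "{\"imagePullSecrets\": [{\"name\": \"$1\"}]}"
}
```

Both commands act on the namespace of your current kubectl context, so make sure it matches the namespace Werf deploys to.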

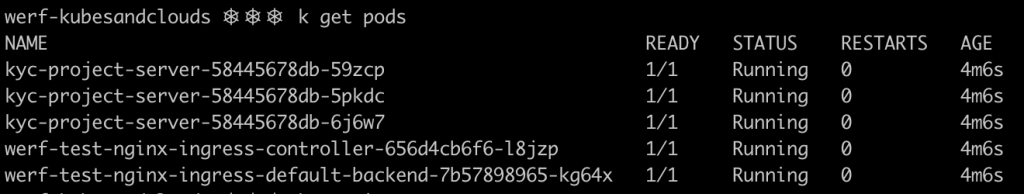

If everything goes well, by the end of the job Werf will have deployed the entire application to the cluster.

TIP 5: To obtain your kubeconfig in base64 you can use the following command (assuming your local kubeconfig points to the remote cluster to use).
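A minimal sketch of that encoding step using only base64 and tr. The helper name encode_kubeconfig is hypothetical, and the kubeconfig path is passed explicitly rather than guessed.

```shell
# Print the given kubeconfig file as a single base64 line, suitable for
# pasting into a CI secret such as KUBE_CONFIG_BASE64_DATA.
# Newlines are stripped because some base64 implementations wrap long output.
encode_kubeconfig() {
  base64 < "$1" | tr -d '\n'
}
```

For example, encode_kubeconfig ~/.kube/config prints the encoded contents of the default kubeconfig.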

Thanks to these previously developed actions, integrating Werf in your existing CI/CD environment can be easier than you thought!

Keep learning 👩💻👨💻

If you liked Werf, take some time to go through its documentation, as you will better understand all the commands and options offered by the CLI, as well as all the possible integrations with CI/CD environments.

Besides, this documentation introduces advanced use cases that are really interesting, as they present complex scenarios that resemble production application environments and issues. Some of the most interesting use cases are the following ones:
